Hi,

I am trying to calculate positron parameters for a carbon vacancy in 3C-SiC using a 215-atom supercell.

I get a “Segmentation fault” error when the positron SCF starts, after the electron SCF has converged.

In the positron SCF, one of the Dij values is found to be >50.

However, the 8-atom unit cell and 64-atom supercell calculations completed both steps successfully, even with the same Dij value >50.

Since the potentials are generated from the JTH input files, I have not tried the suggestion given by abinit to check the potentials.

thanks

-rajaraman

log.abi (260.8 KB)

sic3c_333_1vc_relax_lt.abi (12.5 KB)

Dear Rajaraman,

It is hard to say what the problem could be from a segmentation fault in the middle of the calculation…

Could it be that you are running out of memory (i.e. RAM) at some point? This typically produces a segmentation fault like this one.

Best wishes,

Eric

Thank you, Eric, for the hints.

It could be a memory issue, but I doubt it.

Our cluster is a 400-node setup.

Each node has two 64-bit Intel Xeon twelve-core processors (Ivy Bridge architecture, 2.7 GHz clock speed).

Each node has 128 GB of DDR3 fully buffered memory.

But why does it fail consistently after completing the electron SCF and starting the positron SCF?

I understand that the positron part may see little active development, given its limited use in recent versions.

Can you please suggest possible debug options?

I mean that I can recompile in order to pin down the error.

Thanks

-rajaraman

OK, 10 GB per CPU does sound large, but a 215-atom supercell is a big cell, so it could go beyond this limit of 10 GB per core (I have never run a positron calculation, so I don’t know exactly how much it requires), especially since you tested with fewer atoms in the cell and it worked. Hence, I still suspect a memory (RAM) problem.

How much RAM per core did you request for your calculation, and how was it distributed?

Eric

Thanks, Eric.

I will rerun and report the dynamic memory usage per CPU once our cluster is back; it is currently down for maintenance.

In the meantime, here is a snapshot from the log of the failed run I posted:

memory: analysis of memory needs

Values of the parameters that define the memory need of the present run

intxc = 0 ionmov = 2 iscf = 17 lmnmax = 8

lnmax = 4 mgfft = 108 mpssoang = 2 mqgrid = 9040

natom = 215 nloc_mem = 2 nspden = 1 nspinor = 1

nsppol = 1 nsym = 24 n1xccc = 1 ntypat = 2

occopt = 1 xclevel = 1

- mband = 540 mffmem = 1 mkmem = 2

mpw = 2344 nfft = 419904 nkpt = 4

Pmy_natom= 12

PAW method is used; the additional fine FFT grid is defined by:

mgfftf= 192 nfftf = 2359296

================================================================================

P This job should need less than 780.359 Mbytes of memory.

Rough estimation (10% accuracy) of disk space for files :

_ WF disk file : 231.770 Mbytes ; DEN or POT disk file : 18.002 Mbytes.

================================================================================

Biggest array : f_fftgr(disk), with 288.0020 MBytes.

memana : allocated an array of 288.002 Mbytes, for testing purposes.

memana: allocated 780.359Mbytes, for testing purposes.

The job will continue.
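As a rough cross-check of the numbers in this snapshot, the sketch below computes the size of one real array on the fine FFT grid. It assumes 8-byte (double precision) reals and 1 Mbyte = 1024² bytes; the helper name `array_mbytes` is made up for illustration and is not part of ABINIT.

```python
# Assumption: values are stored as 8-byte (double precision) reals.
BYTES_PER_REAL = 8

# Values copied from the memory analysis in the log above.
nfftf = 2_359_296      # points on the fine (PAW) FFT grid
nspden = 1             # number of spin-density components
f_fftgr_mbytes = 288.002  # size reported for the biggest array


def array_mbytes(n_points, n_components=1, bytes_per_value=BYTES_PER_REAL):
    """Size in Mbytes (1024**2 bytes) of one real array on the grid."""
    return n_points * n_components * bytes_per_value / 1024**2


den_mb = array_mbytes(nfftf, nspden)
ratio = f_fftgr_mbytes / den_mb

print(f"one density-like array: {den_mb:.3f} Mbytes")   # 18.000 Mbytes
print(f"f_fftgr / one array   : {ratio:.1f}")            # 16.0
```

Under these assumptions one array of nfftf points comes to 18 Mbytes, in line with the DEN/POT estimate of 18.002 Mbytes, and the 288 Mbytes reported for f_fftgr is about 16 times that. This only reproduces part of the ground-state estimate; it says nothing about what the positron step needs.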

Hello Rajaraman,

I looked at your log and can confirm that there is no explicit error message from Abinit…

The memory analysis reported by Abinit is not reliable, even more so for PAW calculations, so you cannot rely on it (it will be removed in a future version). Moreover, this estimate is made for the ground state and not for the positron calculation, which is where the crash occurs. Additionally, depending on the operating system installed on the machine, extra memory is used on top of what the MPI processes need (this can be around 500 MB per core).

Best wishes,

Eric

Dear Eric,

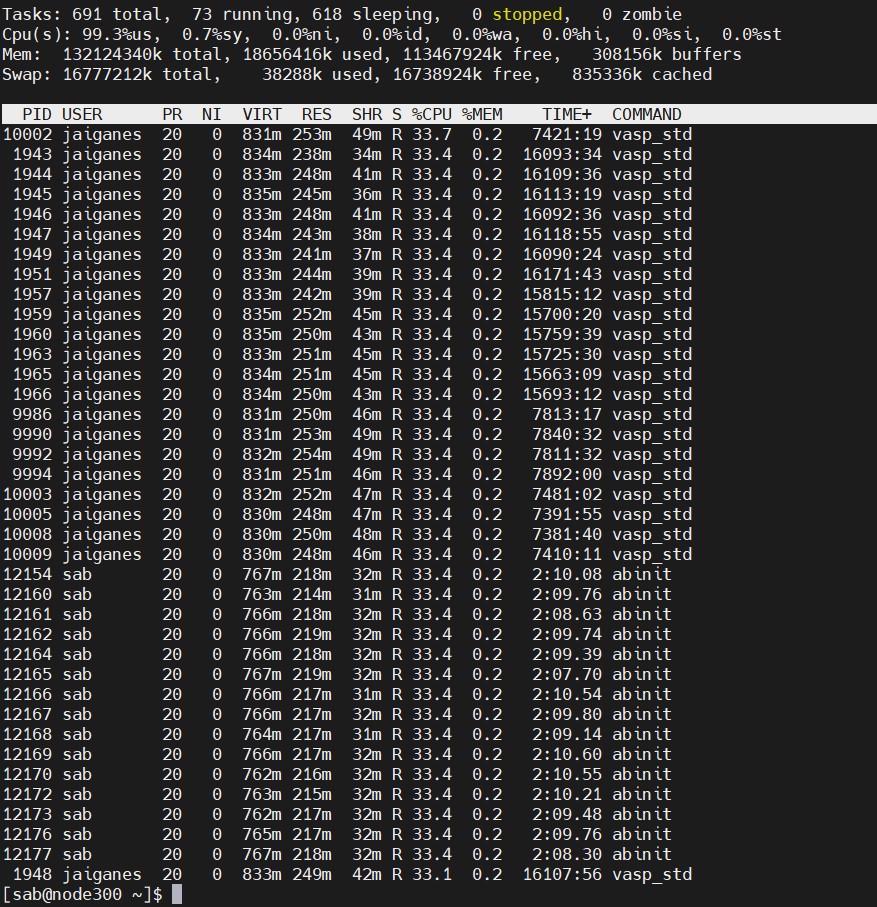

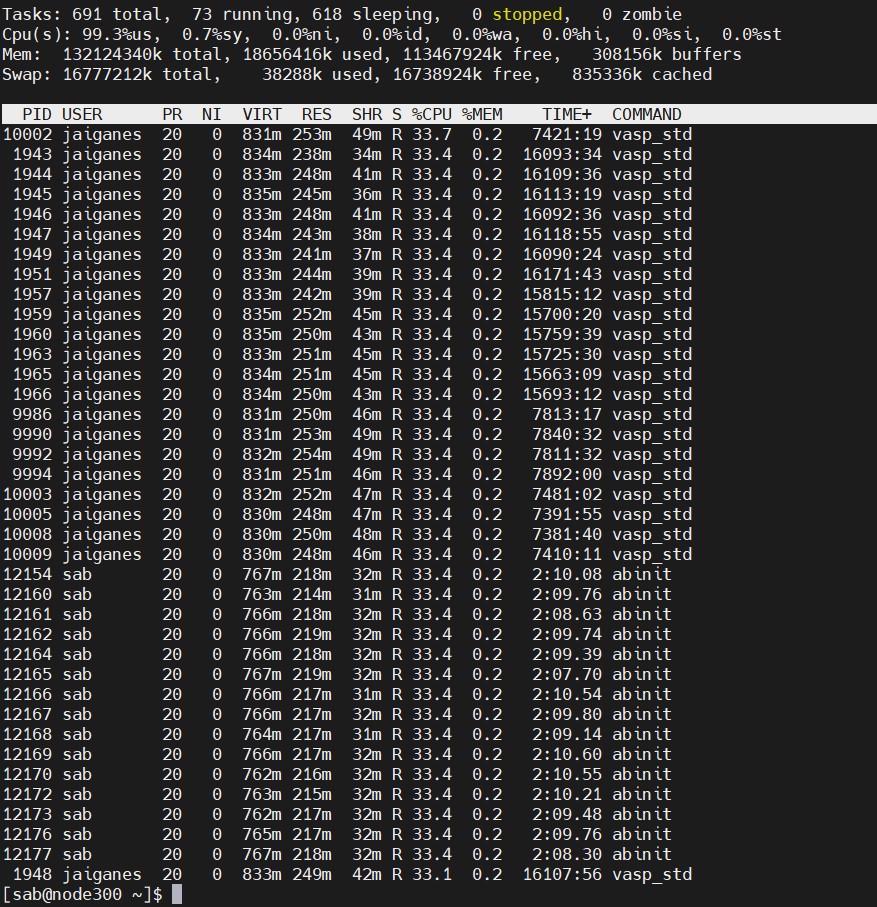

Here is a snapshot of “top” for one of the nodes running the abinit calculation.

As far as I can see, only 18 GB of memory is used out of 132 GB.

I can watch it at the time of failure.

That is why I asked about recompiling in debug mode.

thanks

-rajaraman

Thank you for the top report. It will indeed be interesting to see what it shows at the moment of the crash, since that is when the positron calculation runs…
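One way to capture memory use at the moment of the crash without watching “top” by hand is to log it at regular intervals on the compute node. The following is a minimal sketch, assuming a Linux node whose `/proc/meminfo` provides the `MemTotal` and `MemAvailable` fields; the function names are illustrative only and have nothing to do with Abinit.

```python
import time


def parse_meminfo(text):
    """Return {field: value} from /proc/meminfo-style text (values mostly in kB)."""
    info = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            info[name.strip()] = int(parts[0])
    return info


def used_gib(info):
    """Memory in use, estimated as MemTotal - MemAvailable, in GiB."""
    return (info["MemTotal"] - info["MemAvailable"]) / 1024**2


def log_memory(path="/proc/meminfo", interval_s=5.0, samples=3):
    """Print a timestamped used-memory sample every interval_s seconds."""
    for _ in range(samples):
        with open(path) as fh:
            info = parse_meminfo(fh.read())
        stamp = time.strftime("%H:%M:%S")
        print(f"{stamp} used = {used_gib(info):.1f} GiB", flush=True)
        time.sleep(interval_s)
```

Calling `log_memory(interval_s=1.0, samples=100000)` in the background of the job script, with its output redirected to a file, would leave a record of node memory right up to the point where the job disappears. It reports memory for the whole node, not per MPI process.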

The warning you mentioned appears in the log just before the crash:

pawio_print_ij: WARNING -

However, this should indicate poor convergence rather than cause a crash…

Eric

Dear Eric,

Yes, I watched “top” when it crashed.

The jobs just disappeared.

I did not see any unusual RAM activity.

Maybe those familiar with the code can help pin down what steps are taken when it switches to the positron SCF.

thanks

-rajaraman

OK, while we wait for a developer to respond, I noticed that you also asked for compile flags to get more details. You can recompile with the backtrace compile flag enabled (with gfortran that is “-fbacktrace”, usually together with “-g”; “-fno-backtrace” switches it off), but do a separate compilation, as the version with backtrace might run slowly.

Eric

Thank you Eric.

I will get back with what the backtrace throws up.

-rajaraman

Dear Eric,

Thanks for the hints on compiling with debugging options.

Instead of compiling in debug mode, I started from the silicon tutorial files and went up to a 218-atom supercell.

It worked fine!

A comparison of the input files revealed that I was using the default options for printing wf & eig.

Forcing them not to print did the trick:

prtwf 0

prteig 0

We don’t need these printouts for positron runs (though we do use the density output for visualization).
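To catch this kind of difference before submitting a large job, one could scan the input file for the two variables. The sketch below is a deliberately simplified reader, not an Abinit parser: it assumes whitespace-separated `name value` pairs with `#` or `!` starting a comment, and it ignores dataset suffixes and the rest of the input syntax. The function names are made up for illustration.

```python
def read_settings(text, names):
    """Return {name: value} for the given variable names found in the text.

    Simplified: strips '#' and '!' comments, splits on whitespace, and takes
    the token following each variable name as its value.
    """
    tokens = []
    for line in text.splitlines():
        for marker in ("#", "!"):
            line = line.split(marker, 1)[0]
        tokens.extend(line.split())
    found = {}
    for i, tok in enumerate(tokens[:-1]):
        if tok.lower() in names:
            found[tok.lower()] = tokens[i + 1]
    return found


def missing_settings(text, names=("prtwf", "prteig")):
    """List the variables that are not set explicitly (left at their defaults)."""
    found = read_settings(text, names)
    return [name for name in names if name not in found]


example = """
ecut 15      # illustrative value only
prtwf 0
"""
print(missing_settings(example))  # ['prteig']
```

Running `missing_settings(open("sic3c_333_1vc_relax_lt.abi").read())` on the input attached at the top of the thread would list whichever of the two variables is still left at its default.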

Thanks for your help; I also learnt a bit about debugging.

-rajaraman

OK, great, I’m glad you found the problem.

Thank you for the feedback!

Best wishes,

Eric